A Sneak Peek Inside Databricks Agent Bricks: Building AI Agents with Ease

- hiteshsahni

- Aug 25, 2025

- 7 min read

Updated: Jan 12

Databricks announced a new product, “Agent Bricks”, at its Data + AI Summit in June 2025. It is currently in Beta and available only in a few US regions on Azure and AWS.

Although there has been an explosion of LLMs, agentic frameworks, protocols, and low-code/no-code tools for building agents in recent months, building and deploying high-quality, domain-specific, production-grade AI agents with proper guardrails and high accuracy for enterprise use cases remains very challenging. I have witnessed this firsthand in live projects for real enterprise use cases. Agent Bricks promises to solve these challenges.

"As a Data & AI enthusiast, and on behalf of my company EnablerMinds, we are exploring different AI frameworks and evaluating their pros and cons for real-world projects. I decided to get hands-on with Databricks Agent Bricks to test its capabilities and see if it delivers on its promises—because it only gets real once you roll up your sleeves and try it yourself."

Pre-requisites

To use Databricks Agent Bricks, you need the following in place:

Databricks workspace with Unity Catalog enabled.

Mosaic AI Agent Bricks Preview (Beta) enabled.

Agent Bricks is currently available on Azure and AWS (at the time of writing this article).

On both Azure and AWS, Agent Bricks is available in selected US regions. Check the documentation for the exact regions.

The workspace used in this demo is set up in the Azure East US region.

Serverless Compute enabled.

Access to foundation models in Unity Catalog through the system.ai schema.

Access to a serverless budget policy with a nonzero budget.

By default, workspace admins can create serverless budget policies. Non-admins can manage policies if they are assigned the Serverless budget policy: Manager permission.

Remember that serverless budget policies are account-level objects. You need the billing admin role at the account level to manage all policies for a given account.

You assign a serverless budget policy to one or more Databricks workspaces.

Serverless budget policies can be found in account console under “Usage” tab (as shown in screenshot below):

Agent Bricks is not available in the new Databricks Free Edition, which was also announced at the Data + AI Summit in June 2025. You can only try it out in a Premium or Enterprise edition Databricks workspace.

How Agent Bricks Works

Agent Bricks streamlines the implementation of AI agent systems through a declarative approach: you define the agent's task and description and provide the data sources, and it handles the rest. It automates the creation of the agent, including the provisioned infrastructure and a model serving endpoint with the right models, and it automates agent evaluation and optimization.

Below is a short excerpt from the documentation on how Agent Bricks works behind the scenes to create your agent and automate evaluation:

Specify your problem

Specify your use case and point to your data.

Agent Bricks automatically builds out the entire AI agent system for you

Agent Bricks will automatically try various AI models, fine-tune them using your data, optimize the systems using innovative AI techniques, and evaluate them to offer you the best built-out systems.

Further refine the system for continuous improvement

When you use Agent Bricks, Azure Databricks will run additional methods and hyperparameter sweeps in the background to continually optimize your model result. If evaluations indicate a different model would achieve better results, Databricks will notify you without additional charge.

If you work in the Databricks ecosystem and have Unity Catalog set up with volumes for your unstructured data, Agent Bricks makes it very simple to build production-grade agents on your enterprise data.

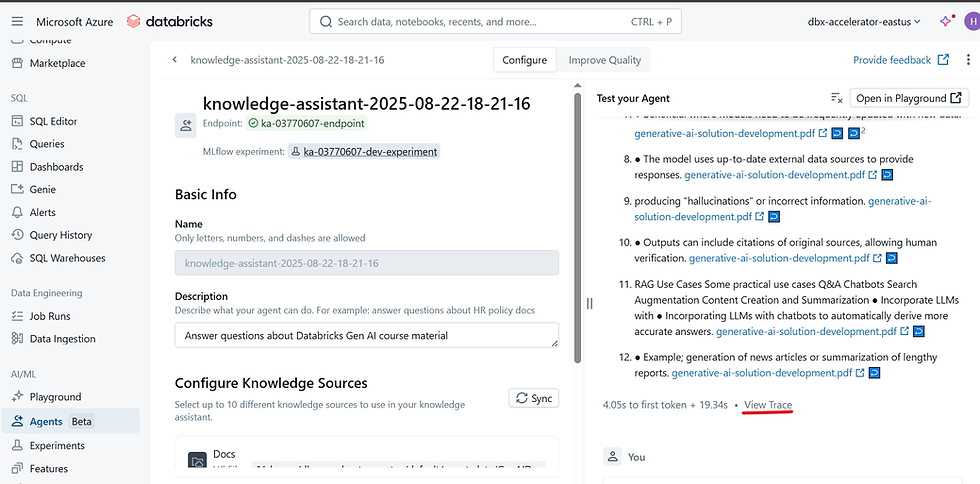

Agent Bricks is available via the “Agents” pane under the “AI/ML” section on the left side of the Databricks workspace:

If you don't see it, you most likely have not yet enabled it in Previews.

Go to your workspace → User → Previews:

On the Previews page, search for “Mosaic AI Agent Bricks Preview” and turn on the toggle next to it if it is not yet enabled:

AI Use Cases Categories Supported Currently in Agent Bricks

Agent Bricks is optimized for common industry use cases, including:

| Use case | Description |
|---|---|
| Information Extraction | Transform large volumes of unlabeled text documents into structured tables with extracted information. |
| Custom LLM | Custom text generation tasks, such as summarization, classification, and text transformation. |
| Knowledge Assistant | Turn your documents into a high-quality chatbot that can answer questions and cite its sources. |
| Multi-Agent Supervisor | Design a multi-agent AI system that brings Genie spaces and agents together. |

You will find these four use case types in the Agent Bricks pane of the Databricks workspace:

I believe more use cases will be supported in the near future as Databricks continues to build and expand the Agent Bricks capabilities.

Quick Demo - Knowledge Assistant Use Case with Agent Bricks

I played around with Agent Bricks to build a quick Knowledge Assistant demo in a no-code fashion via the Databricks UI, following the steps below:

Step 0

Choose your use case from the provided menu. I chose Knowledge Assistant in this case.

Step 1

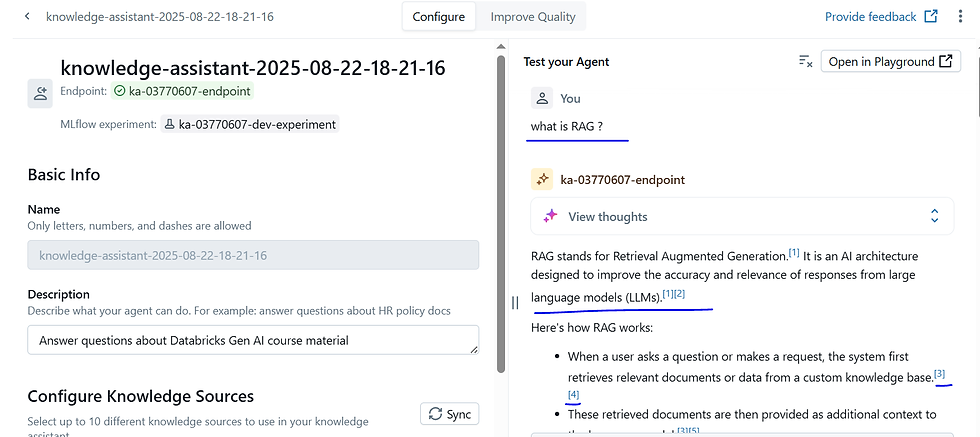

Define the agent name and description. The description tells what your agent can do.

Step 2

Provide data sources for your Knowledge Assistant. You can provide either Unity Catalog files or a vector search index. In my demo, I chose UC Files.

To use UC Files with Agent Bricks, your data must be stored in a storage account or bucket of your cloud subscription/account, and a Unity Catalog volume must already be created.

The UC volume should point to the folder where the PDFs to be used as the knowledge source are stored.

In the demo, I built a mini Q&A assistant that answers questions about Gen AI concepts and best practices, grounded in the provided PDF documents. (The PDFs were a set of Gen AI course slides.)

For UC files, the following file types are supported: txt, pdf, md, ppt/pptx, and doc/docx. Databricks recommends using files smaller than 32 MB.

You can provide up to 10 knowledge sources.
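Before pointing Agent Bricks at a folder, it can help to sanity-check the files against the limits above. The following is a minimal sketch of my own, not part of Agent Bricks: `check_knowledge_files` is a hypothetical helper, and it assumes the folder is readable as a normal file system path.

```python
from pathlib import Path

# File types and size recommendation quoted in this article.
SUPPORTED_EXTENSIONS = {".txt", ".pdf", ".md", ".ppt", ".pptx", ".doc", ".docx"}
RECOMMENDED_MAX_BYTES = 32 * 1024 * 1024  # "smaller than 32 MB"


def check_knowledge_files(folder, max_bytes=RECOMMENDED_MAX_BYTES):
    """Hypothetical helper: split the files directly under `folder` into
    (ok_names, problems), where problems is a list of (name, reason)."""
    ok, problems = [], []
    for path in sorted(Path(folder).iterdir()):
        if not path.is_file():
            continue  # sub-folders are ignored in this sketch
        if path.suffix.lower() not in SUPPORTED_EXTENSIONS:
            problems.append((path.name, "unsupported file type"))
        elif path.stat().st_size >= max_bytes:
            problems.append((path.name, "at or above the recommended size"))
        else:
            ok.append(path.name)
    return ok, problems
```

On Databricks compute, a UC volume is typically reachable under a path of the form `/Volumes/<catalog>/<schema>/<volume>/`, so the helper could be pointed at that folder; the placeholders are yours to fill in.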

In order to use PDFs in other use cases of Agent Bricks like Information Extraction and Custom LLM, Agent Bricks automatically kicks off a workflow using ai_parse_document to convert PDFs into markdown, stored in a table. This is not required for Knowledge Assistant, which supports PDFs directly as seen above.

After configuring the knowledge source, you can optionally fill in the “Instructions” field. This tells the agent about additional things it should take into consideration.

After you click “Create Agent”, it can take from 30 minutes to a few hours, depending on the number of sources and other factors, to create your agent and sync the knowledge sources you provided. The right-side panel will update with links to the deployed agent, experiment, and synced knowledge sources.

In my case, with 4 PDFs, creating this knowledge assistant took around 20-22 minutes.

Testing the Agent

Once the agent is created, you can start asking it questions and test the responses of the newly created knowledge assistant.

The agent also provides references to your documents for each answer, and in long answers even for each point, showing where it fetched the information from. A footnote section after the answer text lists the reference document and the associated sentence or paragraph from it.

This makes the answers easier to verify and increases trust in them.

I was quite happy with the accuracy of the answers in the demo.
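I did my testing in the UI, but since the agent is deployed behind a model serving endpoint, it can presumably be queried programmatically as well. The sketch below only builds the HTTP request and does not send it. The workspace URL, endpoint name, token, and especially the `input` payload shape are assumptions on my part, so compare them with the query example shown for your own endpoint before relying on this.

```python
import json
import urllib.request


def build_agent_request(workspace_url, endpoint_name, token, question):
    """Build (but do not send) a POST request to a serving endpoint.

    All four arguments are placeholders supplied by the caller.
    """
    url = f"{workspace_url.rstrip('/')}/serving-endpoints/{endpoint_name}/invocations"
    # Assumed payload shape: chat-style messages under an "input" key.
    payload = {"input": [{"role": "user", "content": question}]}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Sending the request would then be a matter of passing it to `urllib.request.urlopen` and parsing the JSON body. I have deliberately left the response schema out, since I have not verified it.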

Improve quality of responses:

Agent Bricks Knowledge Assistant can adjust the agent's behavior based on natural language feedback. You gather human feedback through a labeling session, and Agent Bricks uses the labeled data you collect to retrain and optimize the agent, which improves its quality.

In the Improve quality tab, add questions and start a labeling session. Alternatively, you can also import labeled data directly from a Unity Catalog table.

Databricks recommends adding at least 20 questions for a labeling session to ensure enough labeled data is collected.
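If you collect candidate questions from colleagues, a small clean-up step makes it easy to see how far you are from the recommended 20. This is a sketch of my own: `prepare_labeling_questions` is a hypothetical helper and has nothing to do with the Agent Bricks API.

```python
MIN_RECOMMENDED_QUESTIONS = 20  # recommendation quoted in this article


def prepare_labeling_questions(raw_questions):
    """Hypothetical helper: normalize whitespace, drop blanks and
    case-insensitive duplicates, and keep the original order.

    Returns (questions, missing), where `missing` is how many more
    questions are needed to reach the recommended minimum.
    """
    seen, questions = set(), []
    for raw in raw_questions:
        text = " ".join(raw.split())
        key = text.lower()
        if text and key not in seen:
            seen.add(key)
            questions.append(text)
    missing = max(0, MIN_RECOMMENDED_QUESTIONS - len(questions))
    return questions, missing
```

The cleaned list can then be pasted into the Improve quality tab, or written to a table first if you prefer the import route.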

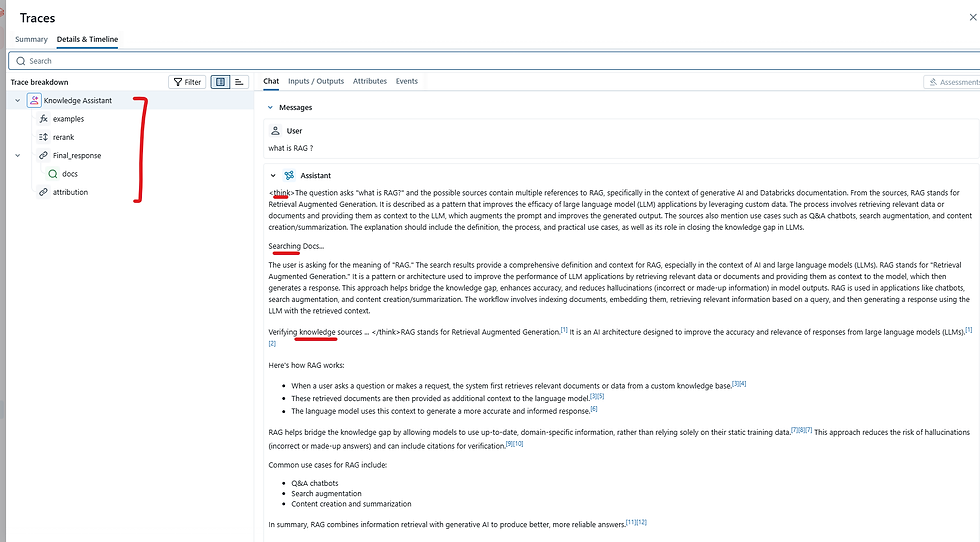

Viewing Traces

One feature of Agent Bricks I like is its integration with MLflow 3: Agent Bricks captures logs, traces, and so on in MLflow Tracing.

You get a detailed set of traces covering inputs, outputs, events, etc.

In my demo, I could see detailed traces captured for each interaction with the LLM, which is very important for companies from a compliance and security point of view.

NOTE: Agent Bricks also leverages MLflow 3 to automatically create evaluation datasets and custom judges tailored to your task (sample below). We will explore this in more detail, as it seems to be one key differentiator of Agent Bricks compared to other agent services out there.

RAG Application Spun Up Behind the Scenes

I assumed this knowledge assistant uses a RAG-based approach behind the scenes, and I wanted to explore the setup and confirm that understanding.

When I explored the underlying setup, I could see that a vector search endpoint had been launched in my Databricks workspace to embed the data into vectors, and that vector search indexes had been created to store those vectors.

Below are an example vector search index and the different Delta tables (source data in binary form, parsed data, and chunks):
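To make the parse → chunk → embed → index → retrieve flow concrete, here is a toy sketch in plain Python. It is emphatically not how Agent Bricks implements it: the "embedding" is a simple bag-of-words count instead of an embedding model, the "index" is an in-memory list instead of a vector search index, and every function name is my own.

```python
import math
import re
from collections import Counter


def chunk_text(text, max_words=40):
    """Split text into consecutive chunks of at most `max_words` words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def build_index(documents, max_words=40):
    """documents: {name: parsed_text}. Returns a list of (name, chunk, vector)."""
    return [(name, chunk, embed(chunk))
            for name, text in documents.items()
            for chunk in chunk_text(text, max_words)]


def retrieve(index, question, top_k=2):
    """Return the `top_k` (name, chunk) pairs most similar to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda row: cosine(q, row[2]), reverse=True)
    return [(name, chunk) for name, chunk, _ in ranked[:top_k]]
```

In a real RAG setup the retrieved chunks are passed to the LLM together with the question. Keeping the document name with each chunk is what makes source citations like the ones in the answers possible.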

Automatic Scaling down and Scaling up

The Agent Bricks endpoint automatically scales down after 30 minutes of inactivity to save resources and cost.

The endpoint starts and scales up again automatically when a question is asked; the wait time is usually between 30 seconds and 1 minute.
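If you call the agent from your own code, that warm-up time means the first request after a quiet period may fail or time out. A simple retry with backoff is one way to absorb it. The sketch below is an assumption-laden illustration: `ask` is any hypothetical callable you provide, I am guessing that a sleeping endpoint surfaces as a `TimeoutError` or `ConnectionError`, and the 90-second budget is just a margin over the wait times I observed.

```python
import time


def call_with_warmup(ask, question, max_wait_seconds=90, first_delay=5,
                     retry_on=(TimeoutError, ConnectionError), sleep=time.sleep):
    """Call `ask(question)`, retrying with exponential backoff (capped at 30 s)
    while the endpoint is waking up.

    `ask` is a hypothetical caller-supplied function that raises one of
    `retry_on` while the endpoint is not ready and returns the answer otherwise.
    """
    waited, delay = 0, first_delay
    while True:
        try:
            return ask(question)
        except retry_on:
            if waited >= max_wait_seconds:
                raise  # waiting budget exhausted: surface the last error
            sleep(delay)
            waited += delay
            delay = min(delay * 2, 30)
```

Passing `sleep` as a parameter is only there to keep the helper easy to test; in normal use you would leave the default.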

Limitations to know

Databricks recommends using files smaller than 32 MB for your source documents.

Workspaces that use Azure Private Link, including storage behind PrivateLink, are not supported.

Workspaces that have Enhanced Security and Compliance enabled are not supported.

Unity Catalog tables are not supported as a source. Only UC volumes (Files) and vector search indexes are supported at this point.

Agent Bricks currently supports only those four use case types, so use cases outside those categories cannot be built with it yet.

Example:

If you work with structured data from DWHs, lakehouses, or SQL databases and want text-to-SQL insights, Genie or an equivalent in-house text-to-SQL solution might be a better option.

Conclusion

As agent systems become increasingly central to enterprise operations, relying on “gut feeling” approaches for ensuring accurate responses or actions from LLMs/agents can pose serious risks. From security and compliance challenges to costly business errors, the implications for enterprises are significant. To address this, organizations need a robust and systematic approach to designing and optimizing intelligent agents that can meet the complexity and requirements of real-world business applications.

Instead of getting lost in the overwhelming complexity of agent development, AI engineers should be free to focus on what truly matters: defining an agent’s purpose and guiding its quality through natural language feedback. Agent Bricks takes care of the rest—automatically generating evaluation suites and continuously optimizing agent performance.

This post is a quick primer on Agent Bricks, along with my first hands-on experience building and testing a knowledge agent using custom document data sources. My goal is to equip fellow enthusiasts—especially those on the Databricks platform—with the information needed to start experimenting, building, evaluating, and even productionizing AI agents more effectively.

I hope this guide helps you get started with Agent Bricks. Feedback is always welcome!

In upcoming posts, we’ll be exploring additional use cases within Agent Bricks, including the multi-agent orchestrator—a powerful pattern for building autonomous AI systems that can automate tasks end-to-end. Stay tuned!
